Implementing the Variant Agent: Part 1

Kicks off the Variant Agent implementation by preparing real sequencing data the agent can query. Walks through pulling a public Colombian family trio VCF, filtering to exome targets, and annotating variants with Illumina's Nirvana. Loads the annotated variants into DuckDB via SQLAlchemy to enable fast, flexible searches. Sets up the data foundation needed for the Variant Agent's search tool covered in the next post.

This post is a continuation of a series about how AI agents might aid in the diagnosis of rare diseases in genomics. See the previous three posts for additional context:

- Can an AI agent help diagnose genetic diseases?

- Implementing the HPO Agent

- Implementing the Gene Agent

Introduction

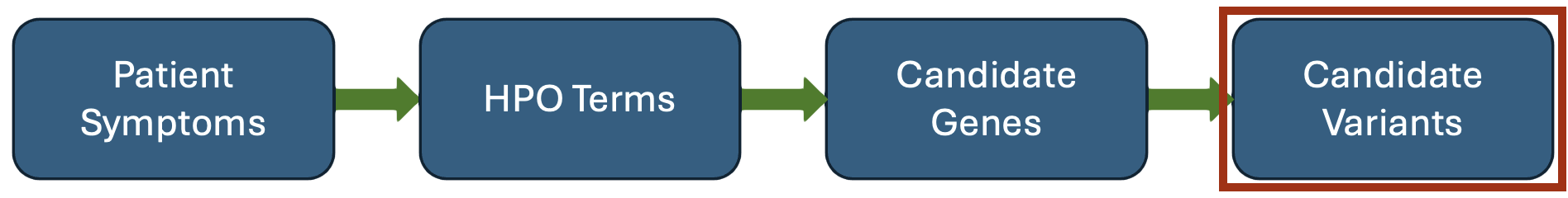

As the last agent in the workflow, the Variant Agent’s job is to search for potentially diagnostic variants within the ranked candidate genes provided by the Gene Agent, using a variant search tool.

The Variant Agent is the most technically complex of the three agents in the system, as it requires more extensive instructions than the other two agents and access to a more sophisticated variant search tool. This first post about the Variant Agent will describe the processing needed to take variants in a VCF file and get them annotated and loaded into a searchable database. The next post will continue with the implementation of the search tool and the agent itself.

Example data

In my original post introducing the AI agent system, I used my own genomic sequencing data to test out the system. To make it possible to follow along, I’ll be using publicly accessible genomic sequencing data in this post. These datasets are available from the International Genome Sample Resource.

I selected the Colombian family HG01466 (daughter), HG01464 (father), and HG01465 (mother). This trio is included in a large multi-sample VCF, so I used bcftools to stream each chromosome and select only the three samples, removing the extraneous INFO fields at the same time. I also filtered out any records where the proband (HG01466) had a reference genotype.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

set -e

set -o pipefail

for CHR in {1..22} X Y; do

CHR="chr${CHR}"

echo "Downloading VCF for chromosome $CHR..."

S3_URL="s3://1000genomes/1000G_2504_high_coverage/working/20201028_3202_raw_GT_with_annot/20201028_CCDG_14151_B01_GRM_WGS_2020-08-05_${CHR}.recalibrated_variants.vcf.gz"

bcftools view \

--threads 4 \

-s HG01466,HG01464,HG01465 \

-i 'GT[0] ~ "[1-9]"' \

-Ou \

$S3_URL \

| bcftools annotate \

-x INFO \

-Oz \

-o colombian_trio.${CHR}.vcf.gz \

--threads 2

done

Once all chromosomes were downloaded, I merged them into a single VCF. As this is whole-genome sequencing data, there are over 5 million variants in the VCF. To make things a bit more manageable, I decided to filter it down to something close to exome sequencing. I picked a common exome targets file (IDT’s xGen Exome Panel), expanded each target by 150bp on each side, and made a new VCF with the variants restricted to those expanded exome target regions. This reduced the number of variants to about 140,000.

Variant annotation

For the Variant Agent to perform its task, it needs to be able to query for variants using a number of criteria, such as:

- Affected gene

- Consequence (stop gain, frameshift, missense, etc.)

- Population frequency (gnomAD)

- ClinVar classification

To obtain these annotations for each variant, I decided to use Illumina’s Nirvana variant annotation tool. While the open-source version is no longer maintained, it can still run with slightly outdated annotation resources. Nirvana is both fast and has a well-defined JSON output format, which made it much easier to load the annotations into a database for searching.

Annotating a VCF with Nirvana first requires you to download the annotation data. Unfortunately, Nirvana does not natively execute on Apple Silicon systems, so I used an Amazon EC2 instance to perform the annotation steps:

1

2

3

4

5

6

7

8

9

10

11

# Download GRCh38 annotation data

mkdir /scratch/Data

dotnet Downloader.dll --ga GRCh38 -o /scratch/Data

# Annotate a VCF

dotnet Nirvana.dll \

-c /scratch/Data/Cache/GRCh38/Both \

-r /scratch/Data/References/Homo_sapiens.GRCh38.Nirvana.dat \

--sd /scratch/Data/SupplementaryAnnotation/GRCh38 \

-i colombian_trio.exome.vcf.gz \

-o colombian_trio.exome

The above will produce colombian_trio.exome.json.gz, a compressed JSON in Nirvana’s output format. The first variant in the file looks like this (I deleted the gnomAD subpopulation entries to save space):

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

{

"chromosome": "chr1",

"position": 69270,

"refAllele": "A",

"altAlleles": [

"G"

],

"quality": 464400,

"filters": [

"VQSRTrancheSNP99.80to100.00"

],

"cytogeneticBand": "1p36.33",

"samples": [

{

"genotype": "1/1",

"variantFrequencies": [

1

],

"totalDepth": 6,

"genotypeQuality": 18,

"alleleDepths": [

0,

6

]

},

{

"genotype": "1/1",

"variantFrequencies": [

1

],

"totalDepth": 3,

"genotypeQuality": 9,

"alleleDepths": [

0,

3

]

},

{

"genotype": "1/1",

"variantFrequencies": [

1

],

"totalDepth": 9,

"genotypeQuality": 27,

"alleleDepths": [

0,

9

]

}

],

"variants": [

{

"vid": "1-69270-A-G",

"chromosome": "chr1",

"begin": 69270,

"end": 69270,

"refAllele": "A",

"altAllele": "G",

"variantType": "SNV",

"hgvsg": "NC_000001.11:g.69270A>G",

"phylopScore": 0.8,

"regulatoryRegions": [

{

"id": "ENSR00000000012",

"type": "open_chromatin_region",

"consequence": [

"regulatory_region_variant"

]

}

],

"dbsnp": [

"rs201219564"

],

"gmeVariome": {

"allAc": 518,

"allAn": 742,

"allAf": 0.6981

},

"gnomad": {

"coverage": 29,

"failedFilter": true,

"allAf": 0.62857

},

"gnomad-exome": {

"coverage": 29,

"failedFilter": true,

"allAf": 0.878137

},

"topmed": {

"allAf": 0.274592,

"allAn": 125568,

"allAc": 34480,

"allHc": 11,

"failedFilter": true

},

"dannScore": 0.58,

"gerpScore": -2.89,

"transcripts": [

{

"transcript": "ENST00000641515.1",

"source": "Ensembl",

"bioType": "protein_coding",

"codons": "tcA/tcG",

"aminoAcids": "S",

"cdnaPos": "303",

"cdsPos": "180",

"exons": "3/3",

"proteinPos": "60",

"geneId": "ENSG00000186092",

"hgnc": "OR4F5",

"consequence": [

"synonymous_variant"

],

"hgvsc": "ENST00000641515.1:c.180A>G",

"hgvsp": "ENST00000641515.1:c.180A>G(p.(Ser60=))",

"isCanonical": true,

"proteinId": "ENSP00000493376.1"

},

{

"transcript": "ENST00000335137.4",

"source": "Ensembl",

"bioType": "protein_coding",

"codons": "tcA/tcG",

"aminoAcids": "S",

"cdnaPos": "216",

"cdsPos": "180",

"exons": "1/1",

"proteinPos": "60",

"geneId": "ENSG00000186092",

"hgnc": "OR4F5",

"consequence": [

"synonymous_variant"

],

"hgvsc": "ENST00000335137.4:c.180A>G",

"hgvsp": "ENST00000335137.4:c.180A>G(p.(Ser60=))",

"proteinId": "ENSP00000334393.3"

},

{

"transcript": "NM_001005484.1",

"source": "RefSeq",

"bioType": "protein_coding",

"codons": "tcA/tcG",

"aminoAcids": "S",

"cdnaPos": "180",

"cdsPos": "180",

"exons": "1/1",

"proteinPos": "60",

"geneId": "79501",

"hgnc": "OR4F5",

"consequence": [

"synonymous_variant"

],

"hgvsc": "NM_001005484.1:c.180A>G",

"hgvsp": "NM_001005484.1:c.180A>G(p.(Ser60=))",

"isCanonical": true,

"proteinId": "NP_001005484.1"

}

]

}

]

}

While Nirvana’s JSON format does have a proprietary index that allows for rapid querying by genomic position (similar to tabix), we need more than just position-based searching. To accommodate all the required search criteria, we will need to insert all of these variants into a database.

Building a variant database

I chose DuckDB for the variant database because it’s an in-process database engine that stores its data in a single file and delivers excellent query performance.

The first step is to define our schema. I decided on this fairly minimal set of columns to store, along with the full JSON of the variant entry so we can retrieve additional annotation data later if we want. It covers all the query parameters we’ll later provide to the Variant Agent via the variant search tool:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

CREATE TABLE IF NOT EXISTS variants (

vid VARCHAR NOT NULL,

chromosome VARCHAR NOT NULL,

variant_index INTEGER NOT NULL,

position INTEGER NOT NULL,

quality DOUBLE NOT NULL,

begin_pos INTEGER NOT NULL,

end_pos INTEGER NOT NULL,

ref_allele VARCHAR NOT NULL,

alt_allele VARCHAR NOT NULL,

genotype VARCHAR,

genotype_quality DOUBLE,

total_depth INTEGER,

allele_depths INTEGER[],

maternal_genotype VARCHAR,

paternal_genotype VARCHAR,

variant_type VARCHAR,

gene_symbols VARCHAR[],

canonical_transcripts VARCHAR[],

transcript_consequences VARCHAR[],

gnomad_af DOUBLE,

clinvar_classifications VARCHAR[],

raw JSON NOT NULL,

PRIMARY KEY (vid, chromosome)

);

CREATE INDEX IF NOT EXISTS v_chr_begin_idx ON variants(chromosome, begin_pos);

CREATE INDEX IF NOT EXISTS v_gnomad_af_idx ON variants(gnomad_af);

Loading the variants into the database requires that we parse each Nirvana position entry, enumerate each variant present, and insert the relevant annotation data into the variants table. We also want to perform the insertions in batches to avoid reading the entire JSON file in memory. To accomplish this, we can use the Python ijson package to stream the JSON position entries from the compressed JSON file.

I wrote the Python script below to perform the variant loading. Additionally, it creates three mapping tables to make searching the gene_symbols, transcript_consequences, and clinvar_classifications columns easier. While DuckDB has a few native methods for efficiently querying list columns, I plan to use SQLAlchemy for the variant query tool and these mapping columns will make the implementation easier.

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

24

25

26

27

28

29

30

31

32

33

34

35

36

37

38

39

40

41

42

43

44

45

46

47

48

49

50

51

52

53

54

55

56

57

58

59

60

61

62

63

64

65

66

67

68

69

70

71

72

73

74

75

76

77

78

79

80

81

82

83

84

85

86

87

88

89

90

91

92

93

94

95

96

97

98

99

100

101

102

103

104

105

106

107

108

109

110

111

112

113

114

115

116

117

118

119

120

121

122

123

124

125

126

127

128

129

130

131

132

133

134

135

136

137

138

139

140

141

142

143

144

145

146

147

148

149

150

151

152

153

154

155

156

157

158

159

160

161

162

163

164

165

166

167

168

169

170

171

172

173

174

175

176

177

178

179

180

181

182

183

184

185

186

187

188

189

190

191

192

193

194

195

196

197

198

199

200

201

202

203

204

205

206

207

208

209

210

211

212

213

214

215

216

217

218

219

220

221

222

223

224

225

226

227

228

229

230

231

232

233

234

235

236

237

238

239

240

241

242

243

244

245

246

247

248

249

250

251

252

253

254

255

256

257

258

259

260

261

262

263

264

265

266

267

268

269

270

271

272

273

274

275

276

277

278

279

280

281

282

283

284

285

286

287

288

289

290

291

292

293

294

295

296

297

298

299

300

301

302

303

304

305

306

307

import argparse

import gzip

import json

from decimal import Decimal

from typing import Any, Dict, Iterable, List

import ijson

import duckdb

def decimal_default(obj: Any):

"""JSON serializer for Decimal types."""

if isinstance(obj, Decimal):

return float(obj)

raise TypeError

def stream_positions(json_gz_path: str) -> Iterable[Dict[str, Any]]:

"""

Stream position objects from a gzipped JSON file.

"""

with gzip.open(json_gz_path, "rb") as f:

yield from ijson.items(f, "positions.item")

def create_variant_table(conn: duckdb.DuckDBPyConnection):

"""Ensure the variant table exists with the appropriate schema."""

conn.execute(

"""

CREATE TABLE IF NOT EXISTS variants (

vid VARCHAR NOT NULL,

chromosome VARCHAR NOT NULL,

variant_index INTEGER NOT NULL,

position INTEGER NOT NULL,

quality DOUBLE NOT NULL,

begin_pos INTEGER NOT NULL,

end_pos INTEGER NOT NULL,

ref_allele VARCHAR NOT NULL,

alt_allele VARCHAR NOT NULL,

genotype VARCHAR,

genotype_quality DOUBLE,

total_depth INTEGER,

allele_depths INTEGER[],

maternal_genotype VARCHAR,

paternal_genotype VARCHAR,

variant_type VARCHAR,

gene_symbols VARCHAR[],

canonical_transcripts VARCHAR[],

transcript_consequences VARCHAR[],

gnomad_af DOUBLE,

clinvar_classifications VARCHAR[],

raw JSON NOT NULL,

PRIMARY KEY (vid, chromosome)

);

CREATE INDEX IF NOT EXISTS v_chr_begin_idx ON variants(chromosome, begin_pos);

CREATE INDEX IF NOT EXISTS v_gnomad_af_idx ON variants(gnomad_af);

"""

)

def create_mapping_tables(conn: duckdb.DuckDBPyConnection):

"""Create mapping tables for variants."""

conn.execute(

"""

DROP TABLE IF EXISTS variant_genes;

CREATE TABLE variant_genes AS

SELECT

vid,

chromosome,

UNNEST(gene_symbols) AS gene_symbol

FROM variants

WHERE gene_symbols IS NOT NULL;

CREATE INDEX vg_gene_idx ON variant_genes(gene_symbol);

CREATE INDEX vg_vid_chr_idx ON variant_genes(vid, chromosome);

DROP TABLE IF EXISTS variant_consequences;

CREATE TABLE variant_consequences AS

SELECT

vid,

chromosome,

UNNEST(transcript_consequences) AS consequence

FROM variants

WHERE transcript_consequences IS NOT NULL;

CREATE INDEX vtc_consequence_idx ON variant_consequences(consequence);

CREATE INDEX vtc_vid_chr_idx ON variant_consequences(vid, chromosome);

DROP TABLE IF EXISTS clinvar_classifications;

CREATE TABLE clinvar_classifications AS

SELECT

vid,

chromosome,

UNNEST(clinvar_classifications) AS classification

FROM variants

WHERE clinvar_classifications IS NOT NULL;

CREATE INDEX vcc_classification_idx ON clinvar_classifications(classification);

CREATE INDEX vcc_vid_chr_idx ON clinvar_classifications(vid, chromosome);

"""

)

def batch_insert(

conn: duckdb.DuckDBPyConnection, rows: List[tuple], columns: List[str]

):

"""Insert a batch of rows into the variants table."""

placeholders = ", ".join("?" for _ in columns)

conn.executemany(

f"INSERT INTO variants ({', '.join(columns)}) VALUES ({placeholders})", rows

)

def build_variant_record(

variant: Dict[str, Any], variant_index: int, position: Dict[str, Any]

) -> Dict[str, Any]:

"""

Build a variant record by combining variant and position information.

"""

chromosome = position.get("chromosome")

vid = variant.get("vid")

vid_position = position.get("position")

quality = position.get("quality")

begin_pos = variant.get("begin")

end_pos = variant.get("end")

ref_allele = variant.get("refAllele")

alt_allele = variant.get("altAllele")

# Samples:

# [0] = proband,

# [1] = paternal (if present)

# [2] = maternal (if present)

samples = position.get("samples", [])

proband = samples[0] if len(samples) > 0 else {}

paternal = samples[1] if len(samples) > 1 else {}

maternal = samples[2] if len(samples) > 2 else {}

genotype = proband.get("genotype")

if isinstance(genotype, str):

genotype = genotype.replace("|", "/").replace(".", "0")

genotype_quality = proband.get("genotypeQuality")

total_depth = proband.get("totalDepth")

allele_depths = proband.get("alleleDepths", [])

if not isinstance(allele_depths, list):

allele_depths = []

paternal_genotype = paternal.get("genotype")

maternal_genotype = maternal.get("genotype")

if isinstance(paternal_genotype, str):

paternal_genotype = paternal_genotype.replace("|", "/").replace(".", "0")

if isinstance(maternal_genotype, str):

maternal_genotype = maternal_genotype.replace("|", "/").replace(".", "0")

variant_type = variant.get("variantType")

# Transcripts: collect gene symbols, canonical transcripts, and flat consequences

transcripts = variant.get("transcripts", [])

gene_names = set()

canonical_txs = set()

consequences = set()

for transcript in transcripts:

gs = transcript.get("hgnc")

if gs:

gene_names.add(str(gs))

is_canonical = transcript.get("isCanonical")

if is_canonical:

canonical_txs.add(transcript.get("transcript"))

consequences.update(transcript.get("consequence", []))

gnomad_af = variant.get("gnomad", {}).get("allAf")

if gnomad_af is None:

gnomad_af = 0.0

clinvar_classifications = set()

for clinvar_entry in variant.get("clinvar-preview", []):

entry_classification = (

clinvar_entry.get("classifications", {})

.get("germlineClassification", {})

.get("classification")

)

if entry_classification:

clinvar_classifications.add(entry_classification)

# Raw variant JSON text

raw_json_text = json.dumps(variant, default=decimal_default)

return {

"chromosome": chromosome,

"vid": vid,

"variant_index": variant_index,

"position": int(vid_position) if vid_position is not None else None,

"quality": float(quality) if quality is not None else None,

"begin_pos": int(begin_pos) if begin_pos is not None else None,

"end_pos": int(end_pos) if end_pos is not None else None,

"ref_allele": ref_allele,

"alt_allele": alt_allele,

"genotype": genotype,

"genotype_quality": (

float(genotype_quality) if genotype_quality is not None else None

),

"total_depth": int(total_depth) if total_depth is not None else None,

"allele_depths": [int(x) for x in allele_depths if isinstance(x, (int, float))],

"paternal_genotype": paternal_genotype,

"maternal_genotype": maternal_genotype,

"variant_type": variant_type,

"gene_symbols": list(gene_names),

"canonical_transcripts": list(canonical_txs),

"transcript_consequences": list(consequences),

"gnomad_af": gnomad_af,

"clinvar_classifications": list(clinvar_classifications),

"raw": raw_json_text,

}

def main():

"""Main function"""

parser = argparse.ArgumentParser(description=__doc__)

parser.add_argument(

"-j",

"--json",

required=True,

help="Path to gzipped Nirvana JSON (e.g., *.json.gz)",

)

parser.add_argument(

"-d",

"--db",

required=True,

help="DuckDB database file",

)

args = parser.parse_args()

conn = duckdb.connect(args.db)

create_variant_table(conn)

total = 0

batch = []

batch_size = 5000

seen = set()

cols = [

"chromosome",

"vid",

"variant_index",

"position",

"quality",

"begin_pos",

"end_pos",

"ref_allele",

"alt_allele",

"genotype",

"genotype_quality",

"total_depth",

"allele_depths",

"paternal_genotype",

"maternal_genotype",

"variant_type",

"gene_symbols",

"canonical_transcripts",

"transcript_consequences",

"gnomad_af",

"clinvar_classifications",

"raw",

]

conn.execute("BEGIN;")

try:

for position in stream_positions(args.json):

for variant_index in range(len(position.get("variants", []))):

variant = position["variants"][variant_index]

key = variant.get("vid")

if key in seen:

continue

seen.add(key)

record = build_variant_record(variant, variant_index, position)

row_tuple = tuple(record[c] for c in cols)

batch.append(row_tuple)

if len(batch) >= batch_size:

batch_insert(conn, batch, cols)

total += len(batch)

print(f"Inserted {total:,} records...")

batch.clear()

if batch:

batch_insert(conn, batch, cols)

total += len(batch)

batch.clear()

conn.execute("COMMIT;")

except Exception:

conn.execute("ROLLBACK;")

raise

print(f"Total processed variants: {total:,}")

create_mapping_tables(conn)

conn.close()

print("Done.")

if __name__ == "__main__":

main()

Running the script will produce a DuckDB database file with the variants table populated:

1

2

3

python load_variants.py \

--db colombian_trio.exome.duckdb \

--json colombian_trio.exome.json.gz

Querying the variant database

With the variants loaded into the database, we can now perform some example queries in preparation for building the variant search tool. First, we want to be able to search by gene symbols. As I mentioned previously, I plan to use SQLAlchemy for querying the database, so we’ll set that up:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

17

18

19

20

21

22

23

import warnings

import json

from sqlalchemy import (

create_engine,

MetaData,

Table,

select,

exc as sa_exc,

)

from duckdb_engine import DuckDBEngineWarning

import pandas as pd

warnings.simplefilter("ignore", sa_exc.SAWarning)

warnings.simplefilter("ignore", DuckDBEngineWarning)

engine = create_engine("duckdb:///colombian_trio.exome.duckdb")

conn = engine.connect()

md = MetaData()

v = Table("variants", md, autoload_with=conn)

vg = Table("variant_genes", md, autoload_with=conn)

vc = Table("variant_consequences", md, autoload_with=conn)

cc = Table("clinvar_classifications", md, autoload_with=conn)

Querying based on a maximum gnomAD frequency is simplest, as we can directly reference the value in our WHERE clause:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

gnomad_query = (

select(

v.c.chromosome,

v.c.position,

v.c.ref_allele,

v.c.alt_allele,

v.c.genotype,

v.c.gnomad_af,

)

.where(v.c.gnomad_af < 0.05)

.limit(5)

)

results = pd.read_sql_query(gnomad_query, conn)

print(results.to_string(index=False))

Output:

1

2

3

4

5

6

chromosome position ref_allele alt_allele genotype gnomad_af

chr1 69849 G A 0/1 0.040920

chr1 931131 CCCT - 0/1 0.000758

chr1 935954 G A 0/1 0.006587

chr1 942335 C A 1/1 0.000007

chr1 943526 TT - 1/3 0.023910

Searching by gene symbol efficiently involves a join between the variants and variant_genes tables, which would look like this:

1

2

3

4

5

6

7

8

9

10

11

12

13

14

15

16

gene_query = (

select(

v.c.chromosome,

v.c.position,

v.c.ref_allele,

v.c.alt_allele,

v.c.genotype,

v.c.gene_symbols

)

.distinct()

.join(vg, (vg.c.vid == v.c.vid) & (vg.c.chromosome == v.c.chromosome))

.where(vg.c.gene_symbol == "MECP2")

)

results = pd.read_sql_query(gene_query, conn)

print(results)

Output:

1

2

3

chromosome position ref_allele alt_allele genotype gene_symbols

chrX 154018741 A G 1/1 [IRAK1, MIR718, MECP2]

chrX 154019032 G A 1/1 [IRAK1, MIR718, MECP2]

Queries for the other list columns with mapping tables follow the same format.

What’s next

With this post, we’ve laid all the groundwork needed to build out the Variant Agent. This included obtaining an example VCF for a trio, annotating the VCF using Nirvana, importing the resulting annotations into a searchable database, and performing some example searches using SQLAlchemy.

In the next post, we’ll build the variant search tool and define the Variant Agent’s input and instructions.