Building a chat interface for your inbox

Extends the MCP concept with a Fastmail-specific server that requires both a bearer token and a user-provided Fastmail API token for mailbox access. Details how to run the server, expose it via Cloudflare, and test it with OpenAI's remote MCP support. Introduces a small chat web app that uses the MCP tools so a model can search messages, retrieve content, and answer inbox questions. Covers security choices, demo flows, and ideas for future iterations of the email assistant.

Introduction

Wouldn’t it be great if you could ask an AI model questions that you know are answered somewhere in your email, and have it go find the answer? I think so! In my previous post introducing MCP, I explored how to provide tool access to an LLM using OpenAI’s remote MCP support. I showed a simple proof-of-concept MCP server that provided access to a small set of generated emails to demonstrate the utility of an LLM with email access.

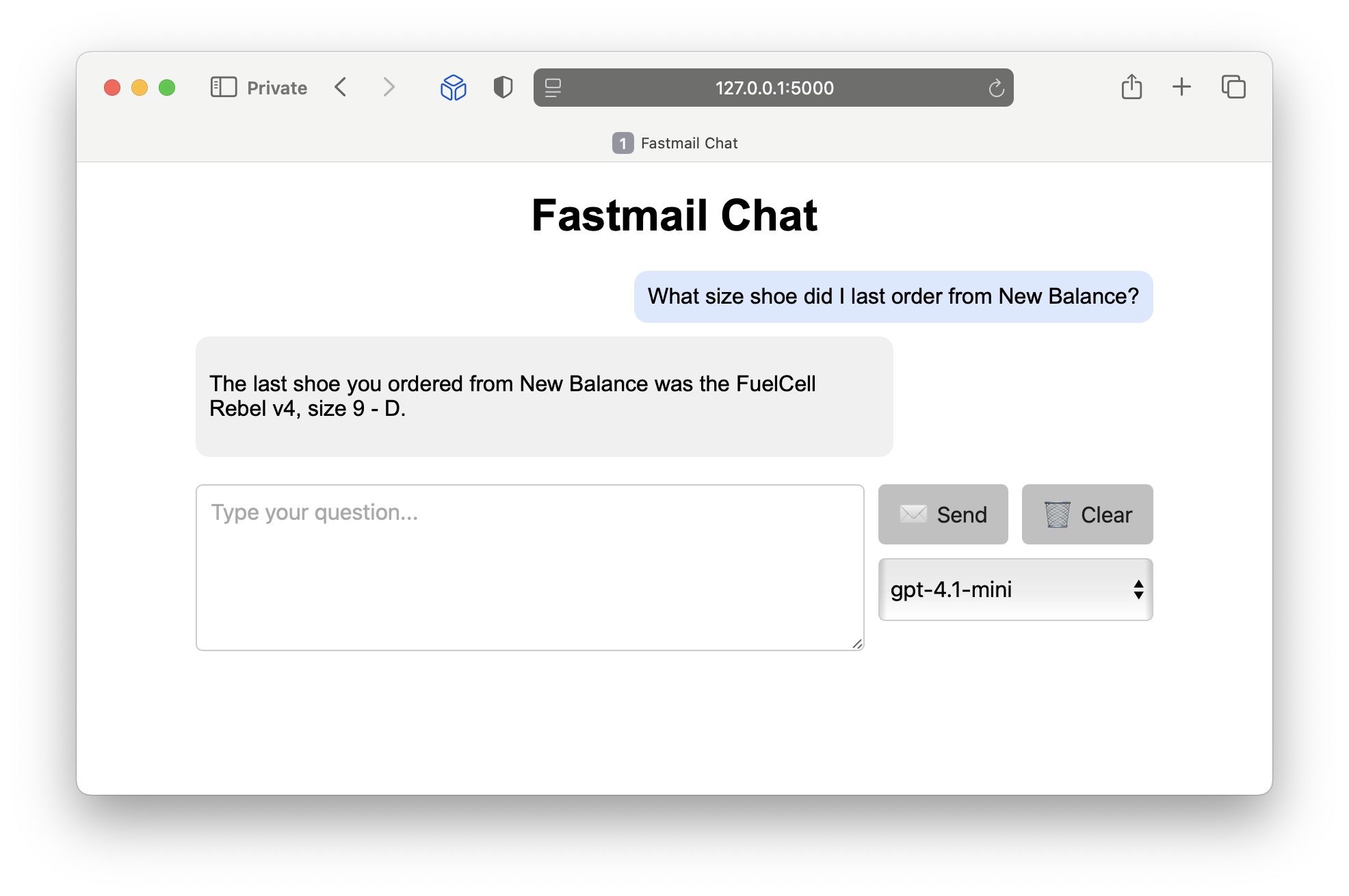

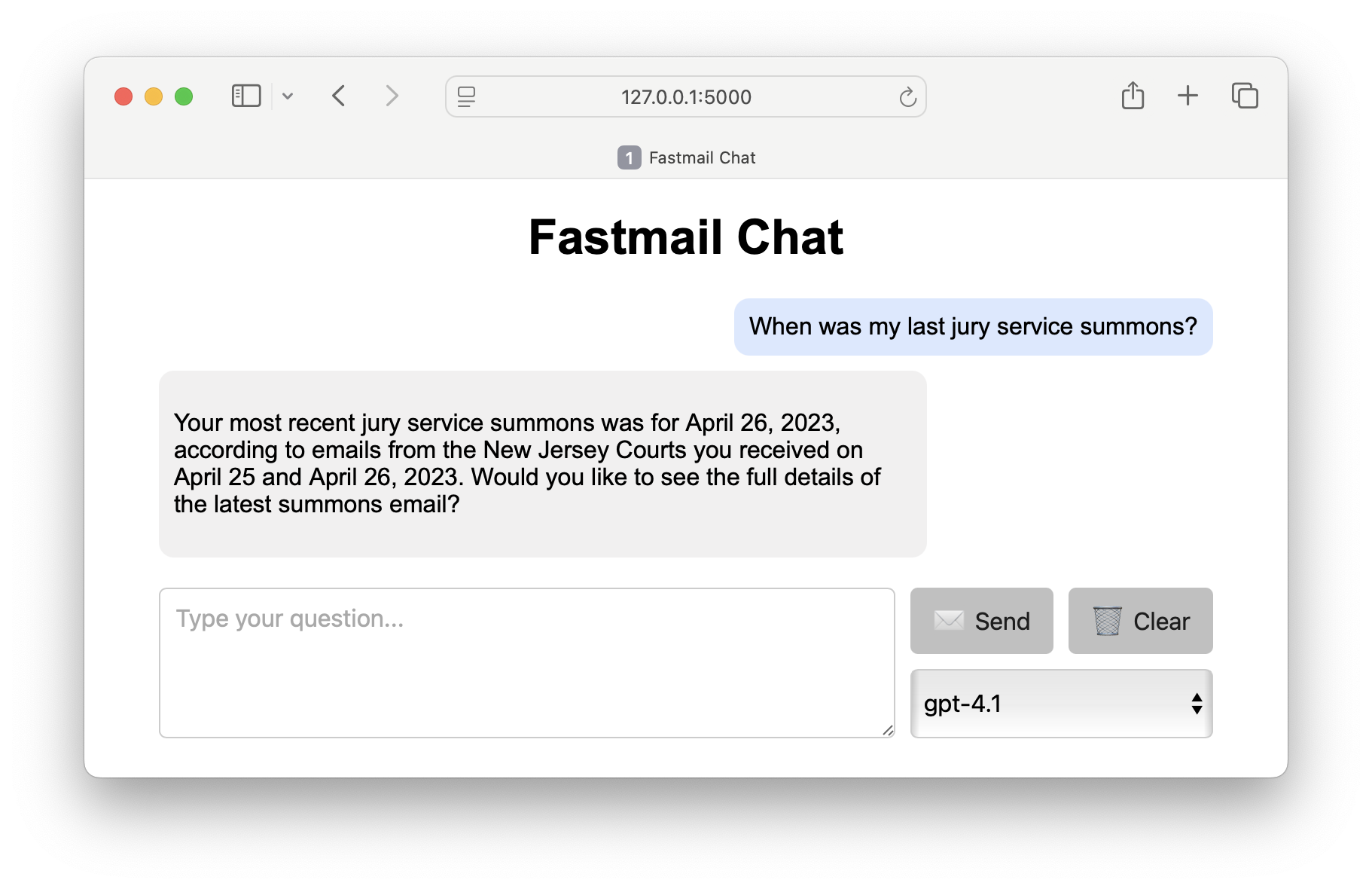

In this post, I’ll describe my Fastmail MCP server, which provides some basic tools for accessing a Fastmail mailbox. Then I’ll introduce a small web app that displays a chat interface powered by OpenAI and the Fastmail MCP server, allowing one to prompt a model with questions that require it to look up relevant messages.

The Fastmail MCP server

To build an MCP server that can interact with a Fastmail mailbox, I had to first think about how I’d programmatically access messages in the mailbox. I could use a standard email protocol like IMAP, but Fastmail supports (and developed) a better standard called JMAP. Fortunately, there’s a Python JMAP client called jmapc that has all the features I wanted - namely listing emails, searching emails, and retrieving the content of emails.
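jmapc hides the wire format, but it can help to see what a JMAP search actually looks like. The sketch below builds a raw request body by hand (it does not use jmapc), following the method-call and result-reference format of RFC 8620 and the `Email/query` and `Email/get` methods of RFC 8621. The account id is a placeholder, and the page size and requested properties are my assumptions about what a listing tool would need.

```python
import json

# Capability URNs from RFC 8620 (core) and RFC 8621 (mail).
JMAP_USING = ["urn:ietf:params:jmap:core", "urn:ietf:params:jmap:mail"]


def build_keyword_search_request(account_id: str, keyword: str,
                                 offset: int = 0, limit: int = 30) -> dict:
    """Build a JMAP request that searches emails and fetches summary fields.

    Email/query finds matching email ids; Email/get then uses a result
    reference ("#ids") to fetch properties for those ids in the same request.
    """
    query_args = {
        "accountId": account_id,
        "filter": {"text": keyword},  # matches headers and body text
        "sort": [{"property": "receivedAt", "isAscending": False}],
        "position": offset,  # index of the first result to return
        "limit": limit,
    }
    get_args = {
        "accountId": account_id,
        # Back-reference: reuse the "/ids" result of the call tagged "q".
        "#ids": {"resultOf": "q", "name": "Email/query", "path": "/ids"},
        "properties": ["id", "from", "subject", "receivedAt"],
    }
    return {
        "using": JMAP_USING,
        "methodCalls": [
            ["Email/query", query_args, "q"],
            ["Email/get", get_args, "g"],
        ],
    }


if __name__ == "__main__":
    request = build_keyword_search_request("u1234", "tracking number")
    print(json.dumps(request, indent=2))
```

Because `Email/get` refers back to the ids produced by `Email/query`, the search and the fetch happen in a single HTTP round trip.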

Fastmail allows users to generate an API token that can be used to authenticate and access a specific mailbox. I designed the MCP server so that the client must provide such a token. This way the server doesn’t need to store any credentials itself; it simply passes the token along when calling Fastmail through the jmapc package.
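Here is a minimal, standard-library-only sketch of that pass-through idea, not the server's actual code. It reads the caller's token from the `fastmail-api-token` header (the header name used in the client example later in this post) and attaches it as a bearer credential to a request for Fastmail's JMAP session resource. The helper names are hypothetical, and the request is only built, not sent.

```python
import urllib.request
from typing import Mapping

# Header name taken from the OpenAI client example in this post.
FASTMAIL_TOKEN_HEADER = "fastmail-api-token"
# Fastmail's JMAP session resource.
FASTMAIL_SESSION_URL = "https://api.fastmail.com/jmap/session"


def fastmail_token_from_headers(headers: Mapping[str, str]) -> str:
    """Return the caller-supplied Fastmail token (header names are case-insensitive)."""
    for name, value in headers.items():
        if name.lower() == FASTMAIL_TOKEN_HEADER and value.strip():
            return value.strip()
    raise PermissionError(f"missing {FASTMAIL_TOKEN_HEADER} header")


def session_request_for(headers: Mapping[str, str]) -> urllib.request.Request:
    """Build (but do not send) a JMAP session request using the caller's token.

    Nothing is persisted: the token lives only as long as this request object.
    """
    token = fastmail_token_from_headers(headers)
    return urllib.request.Request(
        FASTMAIL_SESSION_URL,
        headers={"Authorization": f"Bearer {token}"},
    )
```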

I also wanted to add an additional layer of security so that only authorized users could send requests to the server, not just anyone who knew its URL and had a valid Fastmail API token. To do that, I added a bearer token requirement to the MCP server. The bearer token is a random password chosen by the MCP server operator, and like the Fastmail API token, it must be provided by the client.
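One way to implement that check, sketched under the assumption that the server compares requests against the `BEARER_TOKEN` value exported when it is launched: generate a long random secret once, then compare each request's `Authorization` header against it in constant time. The function names are hypothetical.

```python
import hmac
import secrets
from typing import Optional


def generate_bearer_token(num_bytes: int = 32) -> str:
    """Create a random, URL-safe secret suitable for use as BEARER_TOKEN."""
    return secrets.token_urlsafe(num_bytes)


def is_authorized(authorization_header: Optional[str], expected_token: str) -> bool:
    """Check an 'Authorization: Bearer <token>' header in constant time."""
    if not authorization_header:
        return False
    scheme, _, presented = authorization_header.partition(" ")
    if scheme.lower() != "bearer" or not presented:
        return False
    # compare_digest avoids leaking how many leading characters matched.
    return hmac.compare_digest(presented.strip().encode(), expected_token.encode())
```

A server would call something like `is_authorized(header_value, os.environ["BEARER_TOKEN"])` before dispatching any tool. `hmac.compare_digest` is used instead of `==` so the comparison time does not depend on how many leading characters match.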

My MCP server (as of now) exposes 3 tools:

- `list_inbox_emails`: Lists basic details of messages in the inbox (id, sender, subject, and date). A maximum of 30 messages are sent per tool call, with an `offset` parameter allowing the client to select additional messages beyond the first 30.
- `query_emails_by_keyword`: Returns a list of messages that match a provided keyword. Like the tool above, it also sends up to 30 messages at a time and provides the same `offset` paging feature.
- `get_email_content`: Returns the content of a single email, converted into plain text with a maximum size of 1MB.
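The paging and size-cap behavior can be sketched in a few lines. This is an illustration under assumptions, not the server's code: a real implementation would more likely pass `offset` to JMAP's `position` argument than slice a list locally, and I've assumed "1MB" means 1,048,576 bytes of UTF-8 text. The helper names are hypothetical.

```python
from typing import Sequence, TypeVar

T = TypeVar("T")

PAGE_SIZE = 30  # per-call cap described above
MAX_CONTENT_BYTES = 1024 * 1024  # assumed reading of "1MB"


def page(items: Sequence[T], offset: int = 0, page_size: int = PAGE_SIZE) -> dict:
    """Return one page of results plus the offset of the next page, if any."""
    if offset < 0:
        raise ValueError("offset must be non-negative")
    chunk = list(items[offset:offset + page_size])
    next_offset = offset + len(chunk)
    return {
        "items": chunk,
        "offset": offset,
        "next_offset": next_offset if next_offset < len(items) else None,
    }


def truncate_utf8(text: str, max_bytes: int = MAX_CONTENT_BYTES) -> str:
    """Cut text to at most max_bytes of UTF-8 without splitting a character."""
    encoded = text.encode("utf-8")
    if len(encoded) <= max_bytes:
        return text
    # errors="ignore" drops a partial multi-byte sequence left at the cut point.
    return encoded[:max_bytes].decode("utf-8", errors="ignore")
```

Returning `next_offset` gives the model an explicit value to pass on its next call instead of having to compute it.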

As you’ll see in the Fastmail Chat demonstration below, these three tools provide enough utility to allow LLMs to answer a wide range of queries.

Running the server

Thanks to the cloudflared tool I mentioned in the previous post, getting the server running and accessible to OpenAI’s servers is quite simple. First, launch the server:


```shell
git clone https://github.com/jeffjjohnston/fastmail-mcp-server.git
cd fastmail-mcp-server
python3.12 -m venv .venv
source .venv/bin/activate
pip install -r requirements.txt
export BEARER_TOKEN="<YOUR-SECRET-TOKEN>"
python server.py
```
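Before opening the tunnel, a quick check that something is actually listening on the port cloudflared will forward to can save some confusion. This sketch assumes the server listens on 127.0.0.1:8000, the address used in the `cloudflared` command that follows; the function name is hypothetical.

```python
import socket


def server_is_listening(host: str = "127.0.0.1", port: int = 8000,
                        timeout: float = 1.0) -> bool:
    """Return True if something accepts TCP connections on host:port."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


if __name__ == "__main__":
    print("MCP server reachable:", server_is_listening())
```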

Next, in a separate shell, request a tunnel for the server:

```shell
cloudflared tunnel --url http://127.0.0.1:8000
```

Take note of the Cloudflare-assigned URL for configuring the client below.
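If you'd rather not copy the URL by hand, a small helper can pull it out of cloudflared's log output and append the `/mcp/` path used in the client example below. The regular expression assumes quick-tunnel hostnames look like the example URL in this post (hyphenated words under `trycloudflare.com`); the function name is hypothetical.

```python
import re
from typing import Optional

# Assumed shape of a quick-tunnel URL: hyphenated words under trycloudflare.com.
# Requiring at least one hyphen skips bare hosts such as api.trycloudflare.com.
TUNNEL_URL_RE = re.compile(r"https://[a-z0-9]+(?:-[a-z0-9]+)+\.trycloudflare\.com")


def mcp_url_from_cloudflared_output(output: str, path: str = "/mcp/") -> Optional[str]:
    """Find the tunnel URL in cloudflared's log text and append the MCP path."""
    match = TUNNEL_URL_RE.search(output)
    return match.group(0) + path if match else None
```

cloudflared writes its log to stderr, so capture that stream (for example with `2>&1`) if you want to feed it to a helper like this.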

Testing with OpenAI

To test the server with OpenAI’s remote MCP support, we need to provide three pieces of information in the API call:

- The URL of the MCP server. This is the Cloudflare URL from the `cloudflared tunnel` command.
- The bearer token you assigned to the MCP server.
- A valid Fastmail API token.

You can generate a Fastmail API token from within Fastmail by navigating to Settings -> Privacy & Security -> Connected apps & API tokens. As the MCP server has no features that modify your mailbox, I suggest you generate a token with read-only access.
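Since all three values are secrets, you may prefer to keep them out of source code. The sketch below assembles the same `mcp` tool entry used in the following example from environment variables instead; the variable names are hypothetical, and `load_dotenv()` from python-dotenv can populate them from a `.env` file.

```python
import os
from typing import Mapping

# Hypothetical environment variable names.
ENV_URL = "FASTMAIL_MCP_URL"
ENV_BEARER = "FASTMAIL_MCP_BEARER_TOKEN"
ENV_API_TOKEN = "FASTMAIL_API_TOKEN"


def fastmail_mcp_tool(env: Mapping[str, str] = os.environ) -> dict:
    """Assemble the Responses API MCP tool entry from environment variables."""
    missing = [k for k in (ENV_URL, ENV_BEARER, ENV_API_TOKEN) if not env.get(k)]
    if missing:
        raise KeyError(f"missing environment variables: {', '.join(missing)}")
    return {
        "type": "mcp",
        "server_label": "Email",
        "server_url": env[ENV_URL],
        "require_approval": "never",
        "headers": {
            "Authorization": f"Bearer {env[ENV_BEARER]}",
            "fastmail-api-token": env[ENV_API_TOKEN],
        },
    }
```

With that in place, the call becomes `client.responses.create(model="gpt-4o", tools=[fastmail_mcp_tool()], input=...)`, and a missing variable fails fast with a clear message.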


```python
from openai import OpenAI
from dotenv import load_dotenv

# Load variables such as OPENAI_API_KEY from a local .env file.
load_dotenv()

client = OpenAI()

# Cloudflare-assigned tunnel URL with the MCP endpoint path appended.
FASTMAIL_MCP_URL = "https://abc-def-ghijkl-uri.trycloudflare.com/mcp/"
# The BEARER_TOKEN value the MCP server was started with.
FASTMAIL_MCP_BEARER_TOKEN = "<YOUR-SECRET-TOKEN>"
# A (preferably read-only) Fastmail API token.
FASTMAIL_API_TOKEN = "<YOUR-FASTMAIL-API-TOKEN>"

resp = client.responses.create(
    model="gpt-4o",
    tools=[
        {
            "type": "mcp",
            "server_label": "Email",
            "server_url": FASTMAIL_MCP_URL,
            # Let the model call tools without a separate approval step.
            "require_approval": "never",
            # Sent along with OpenAI's requests to the MCP server.
            "headers": {
                "Authorization": f"Bearer {FASTMAIL_MCP_BEARER_TOKEN}",
                "fastmail-api-token": FASTMAIL_API_TOKEN,
            },
        },
    ],
    input="What's the tracking number from my Amazing Company order email?",
    instructions="Respond without formatting.",
)

print(resp.output_text)
```

That’s it - the model will choose which tools, if any, to use to answer your query.
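To see which tools the model actually chose, you can walk the response's output items. In the Responses API, each remote MCP invocation appears as an item of type `mcp_call` with the tool `name` and its JSON-encoded `arguments`. This sketch works on plain dictionaries, such as `resp.model_dump()["output"]`; the argument names in any real call depend on the server's tool signatures, which aren't shown here, and the function name is hypothetical.

```python
import json
from typing import Any, Iterable, List, Mapping


def summarize_tool_calls(output_items: Iterable[Mapping[str, Any]]) -> List[str]:
    """Describe each MCP tool call found in a Responses API output list."""
    lines = []
    for item in output_items:
        if item.get("type") != "mcp_call":
            continue  # skip messages, tool listings, reasoning items, etc.
        args = json.loads(item.get("arguments") or "{}")
        status = "error" if item.get("error") else "ok"
        lines.append(f"{item['name']}({json.dumps(args, sort_keys=True)}) -> {status}")
    return lines
```

For example, `print("\n".join(summarize_tool_calls(resp.model_dump()["output"])))` after the call above lists each tool invocation on its own line.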

Fastmail Chat

Now that the MCP server is running and accessible to OpenAI, we can use my Fastmail Chat app to interact with it using a simple chat interface. Since the MCP server allows the OpenAI model to list my inbox messages, search for messages, and retrieve message contents, it can help me quickly find information from my email:

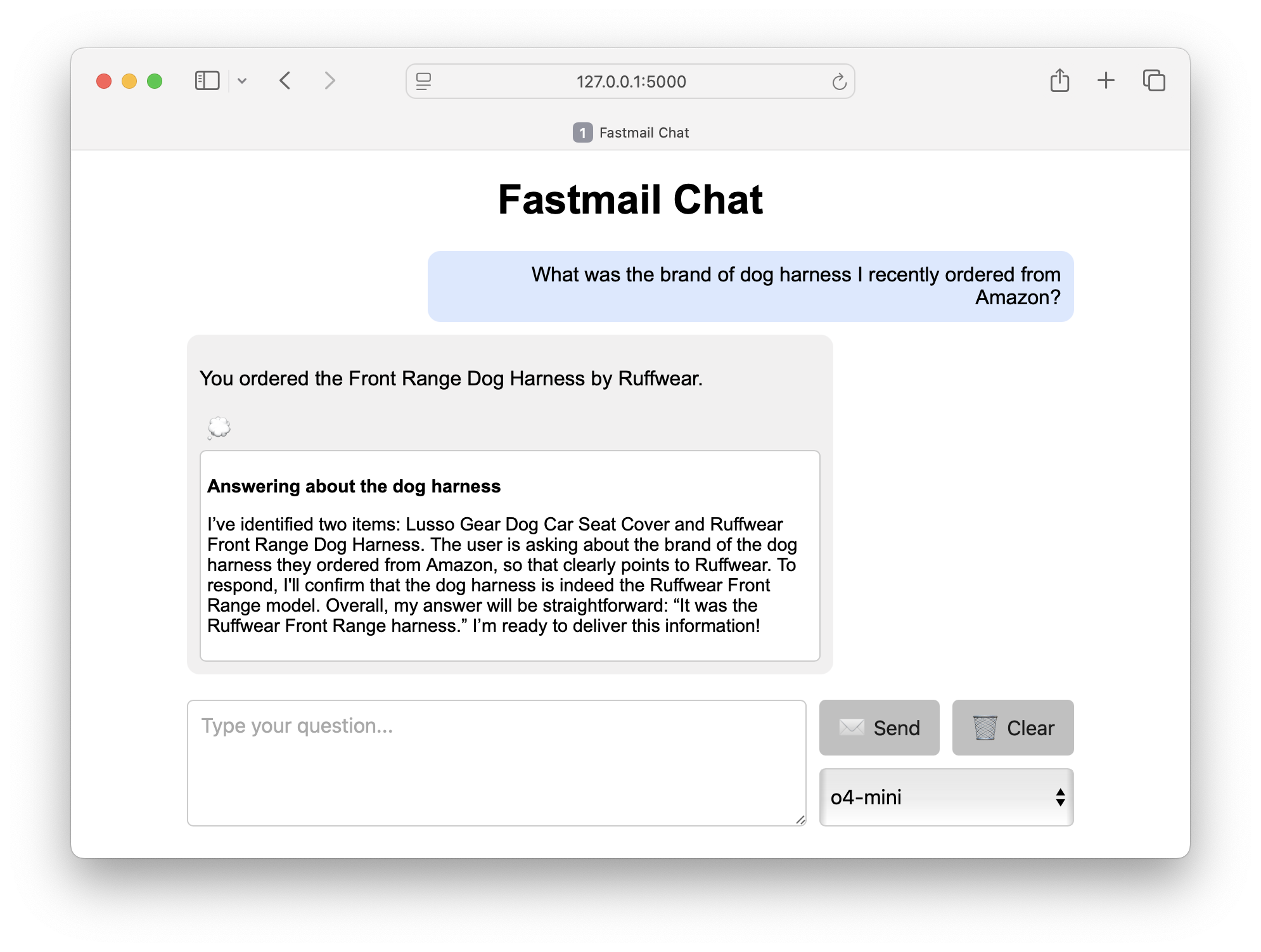
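The app may manage history differently, but one way to keep a multi-turn conversation with the Responses API is to pass each reply's id as `previous_response_id` on the next call, so earlier turns (including tool results) carry forward. A minimal sketch, with a hypothetical `ChatSession` class that takes `client.responses.create` (or any function with the same keyword interface) as a parameter:

```python
from typing import Any, Callable, Optional


class ChatSession:
    """Chain Responses API calls so each turn sees the previous ones.

    `create` is expected to behave like OpenAI().responses.create and to
    return an object with `id` and `output_text` attributes.
    """

    def __init__(self, create: Callable[..., Any], model: str, tools: list):
        self._create = create
        self.model = model
        self.tools = tools
        self._previous_id: Optional[str] = None

    def ask(self, text: str) -> str:
        kwargs = {"model": self.model, "tools": self.tools, "input": text}
        if self._previous_id is not None:
            # Link this turn to the last response instead of resending history.
            kwargs["previous_response_id"] = self._previous_id
        resp = self._create(**kwargs)
        self._previous_id = resp.id
        return resp.output_text
```

Usage would look like `chat = ChatSession(OpenAI().responses.create, model="gpt-4o", tools=[...])` followed by repeated `chat.ask(...)` calls. Injecting `create` also makes the class easy to exercise without network access.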

I added a model selection drop-down and even exposed the reasoning summary for the o-series models. Click the “thinking icon” to reveal the summary.
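On the API side, reasoning summaries are requested with the `reasoning` parameter (`{"summary": "auto"}`) and come back as output items of type `reasoning` whose `summary` holds `summary_text` parts. The sketch below shows that request/extraction pair; the "o" prefix check is a naming heuristic I've assumed for deciding which drop-down choices are reasoning models, not an API rule, and the function names are hypothetical.

```python
from typing import Any, Iterable, Mapping


def request_options(model: str) -> dict:
    """Ask for a reasoning summary only when the selected model looks like an o-series model."""
    options: dict = {"model": model}
    if model.startswith("o"):  # e.g. "o3", "o4-mini"; heuristic, not an API rule
        options["reasoning"] = {"summary": "auto"}
    return options


def reasoning_summary(output_items: Iterable[Mapping[str, Any]]) -> str:
    """Join the summary_text parts of all 'reasoning' items in a Responses API output."""
    parts = []
    for item in output_items:
        if item.get("type") == "reasoning":
            for part in item.get("summary") or []:
                if part.get("type") == "summary_text":
                    parts.append(part["text"])
    return "\n\n".join(parts)
```

The options dict can be merged into the `responses.create(...)` call, and `reasoning_summary(resp.model_dump()["output"])` yields the text a "thinking" panel would display.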

A note about security

The Fastmail Chat app has no inherent security, so if you host it on anything other than the localhost address (127.0.0.1), you run the risk of unauthorized use by anyone who can reach the address it is listening on. There are a few approaches you can take to secure the app so it can be accessed from other devices, such as your phone’s browser:

- Use a Cloudflare Application + Tunnel: This lets you have Cloudflare manage authentication while the app runs on localhost and is only accessed through a Cloudflare tunnel.
- Set up a reverse proxy with basic authentication: Nginx can be configured with basic authentication to proxy requests to your app. You can add a free Let’s Encrypt SSL certificate as well.